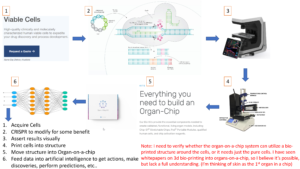

WHAT IS THE REVERSE MATRIX, AN OVERVIEW

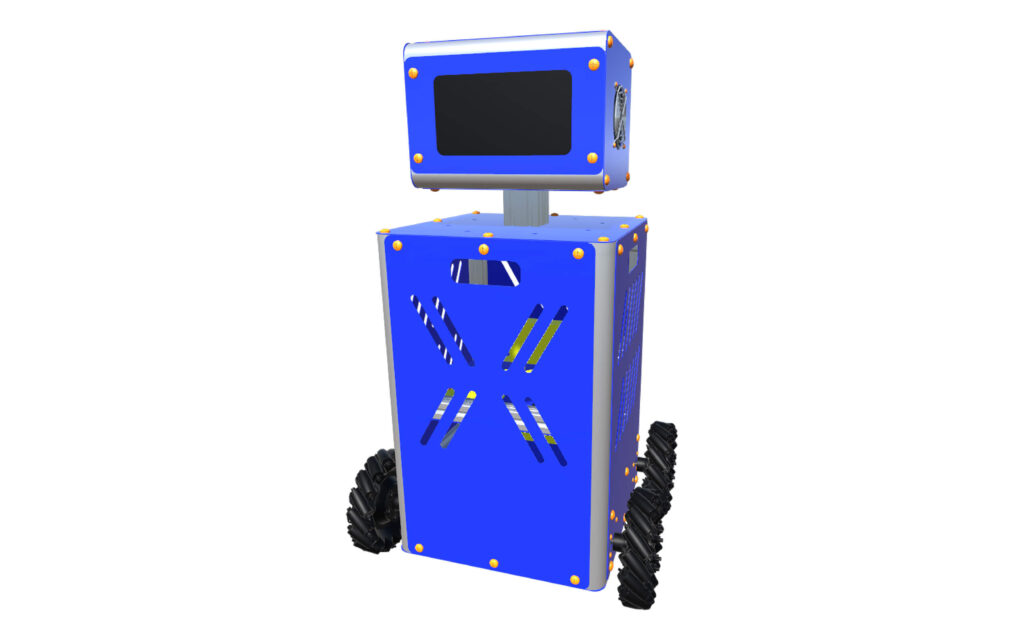

With the advancement of physics engines, machine learning and virtual reality, a host of new opportunities has become available for how our technology can learn, understand and carry out behaviors. Typically, we employ systems that use software as a learning environment to carry out behaviors through software. In other words, software learns to do software things. In this research, I have achieved the ability to develop and learn the optimal actions in a software/virtual reality environment, and then deploy those capabilities to a physical entity, a robot (depicted above), where the robot then carries out the actions in the physical world.

I have called this project the “Reverse Matrix”, after the Matrix movies. The Matrix is a popular set of movies depicting a world where humans believe they are living typical lives but are in fact plugged into vats that extract their energy, as batteries, for the machines that have taken over the earth. The typical idea in the movies is that we learn and operate in a virtual reality. Although it is true that Neo (the hero of the movies) figures out how to carry some special powers from the Matrix into the real world, that is not the norm. My project is the reverse of this: it is about determining whether we can learn from a virtual representation of our world and then use that knowledge in the real world.

A DETAILED LOOK INTO THE REVERSE MATRIX

Below is a screenshot taken from inside the virtual reality environment the physical robots learn from. Let’s break down the scene to give a better understanding of what this project is and what has been accomplished. The first thing to notice is that there are color-coded actors in humanoid form, and a smaller number of larger robotic actors, also color-coded. The colors represent teams working together to solve problems. The problems they are solving are shown on the problem/terminal/answer cubes. Each cube provides a problem statement, an answer (which is also the number of points scored for solving the problem) and 4 Python terminals the humanoid actors can access and use. Yes, you heard correctly, there are computer terminals here that allow Python programming. The terminals are like little computers the actors can use, and this is how they try to solve the problem statements. If the output of an actor’s Python program matches the answer number for the problem statement, that number of points is awarded to the actor’s team.
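To make the scoring rule concrete, here is a minimal sketch of how a problem cube might check a submission. It is not the project’s actual code: `ProblemCube`, `run_terminal`, `score_submission` and the sample problem are hypothetical names and data, and the program is run with a plain `exec`, with no sandboxing, purely for illustration.

```python
import contextlib
import io
from dataclasses import dataclass


@dataclass
class ProblemCube:
    """Hypothetical stand-in for a problem/terminal/answer cube."""
    statement: str
    answer: int  # expected output, which is also the points awarded


def run_terminal(source: str) -> str:
    """Run an actor's Python program and return whatever it printed."""
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(source, {})  # illustration only: a real terminal would be sandboxed
    return buffer.getvalue().strip()


def score_submission(cube: ProblemCube, team: str, source: str, scoreboard: dict) -> int:
    """Award cube.answer points to the team if the program output matches the answer."""
    try:
        output = run_terminal(source)
    except Exception:
        return 0  # a program that crashes scores nothing
    if output == str(cube.answer):
        scoreboard[team] = scoreboard.get(team, 0) + cube.answer
        return cube.answer
    return 0


# Assumed example problem, not taken from the project.
cube = ProblemCube("Print the sum of the integers from 1 to 10.", 55)
scoreboard = {}
red_points = score_submission(cube, "red", "print(sum(range(1, 11)))", scoreboard)
blue_points = score_submission(cube, "blue", "print(10 * 10)", scoreboard)
print(scoreboard)
```

In this sketch the red team’s program prints the expected answer and earns the points, while the blue team’s program prints a different number and earns nothing.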

Now, let’s talk about the larger colored robots. There is 1 per colored team in the scene below, although there can be more. The larger robots are ‘Observers’ and play a very special role with two objectives. Objective one is to record the advancements made in a human-friendly format, so that a human could take that output and use it to create a new system utilizing the advancements, or upgrade an existing system using them. Typically, this is just a simple document in step format; however, my latest versions use a chatbot interface with context, allowing a standard set of topics to be used for deeper conversation around the advances and overall behavior of the actors. The goal here is for the observing robots to be able to offer far more insight than just a list of steps, on both the advancements and the failures. The observers are like a technical writer and a neural network psychologist wrapped up into a chatbot and ready to talk about both sets of topics. The second objective is simply the ability to shut down any of the actors’ code/behaviors/actions if needed. The observer has the advantage of knowing how the learning actors have advanced, which allows it to shut any of them down if needed, even if they become complex, tricky, etc. To summarize, an observer allows humans to utilize the advances in existing or new systems and to understand the failures in the actors’ learning and achievements, and the observer can stop any part of the system, or the whole system, if needed.
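The two observer objectives can be sketched in a few lines of Python. This is only an illustration of the idea under my own assumptions, not the implementation used in the project: the `Actor` and `Observer` classes, their method names and the sample notes are all hypothetical, and the chatbot interface is left out.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Actor:
    """Hypothetical learning actor that can be stopped by its observer."""
    name: str
    running: bool = True

    def act(self, action: str) -> Optional[str]:
        # A stopped actor can no longer carry out actions.
        return f"{self.name}: {action}" if self.running else None


@dataclass
class Observer:
    """Hypothetical observer: records progress and can shut actors down."""
    team: str
    actors: dict = field(default_factory=dict)
    advancements: list = field(default_factory=list)
    failures: list = field(default_factory=list)

    def watch(self, actor: Actor) -> None:
        self.actors[actor.name] = actor

    def record(self, actor_name: str, note: str, success: bool = True) -> None:
        # Objective one: keep a human-readable record of advances and failures.
        target = self.advancements if success else self.failures
        target.append(f"{actor_name}: {note}")

    def report(self) -> str:
        lines = [f"Team {self.team} advancements:"]
        lines += [f"{i}. {note}" for i, note in enumerate(self.advancements, 1)]
        lines.append("Failures:")
        lines += [f"- {note}" for note in self.failures]
        return "\n".join(lines)

    def shut_down(self, actor_name: Optional[str] = None) -> list:
        # Objective two: stop one actor, or every watched actor if no name is given.
        targets = [actor_name] if actor_name else list(self.actors)
        for name in targets:
            self.actors[name].running = False
        return targets


observer = Observer("red")
first, second = Actor("red-1"), Actor("red-2")
observer.watch(first)
observer.watch(second)
observer.record("red-1", "created a file on the filesystem")
observer.record("red-2", "script raised an error", success=False)
observer.shut_down("red-2")
print(observer.report())
```

Calling `shut_down()` with no argument stops every actor the observer is watching, which corresponds to stopping the whole system.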

USING THE VIRTUAL IN THE WORLD OF THE PHYSICAL

Now we get to the interesting part: the ability for a physical entity to post its objectives to a virtual environment and utilize the output in the physical environment. To achieve this, I created a virtual environment to match my physical location and one of my robots. To start, I used a very basic small room with a maze inside it. The goal was for the robot to find the best path through the maze using actions that came entirely from the virtual system, without relying on any of its own capabilities. First, I created the request to solve the maze and sent it from the physical robot into the virtual environment’s objectives list. Next, I sent over the robot’s capabilities, and in the virtual world the actor representing the robot was given the appropriate actions. Then, I added a capability I often use in machine learning that I like to call “AI imagination”, which requires a large number of cloned environments and actors, so I created them as needed, resulting in many virtual worlds. (You can read more about this in other articles on my site, but in short, it’s the ability for an AI to duplicate its environment once for each of its possible actions and evaluate each copy for feasibility, after which only the highest-reward actions per state are sent to the machine learning system. This is a revolutionary departure from how we do machine learning today and is a major factor in my self-improving emergent AI.) The system is then ready to run, and after all the instances have completed, the best action set is sent to the physical robot, which uses the actions to solve the maze in the fastest, most effective manner.
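As a rough illustration of the “AI imagination” step described above, the sketch below clones a small maze environment once per possible action, discards the infeasible copies, and keeps only the highest-reward action for each state. Everything in it is an assumption made for the example rather than the project’s code: the `MAZE` layout, the `MazeEnv` class, and especially the reward, which here is simply the negative of the shortest remaining distance to the goal, computed by a breadth-first search.

```python
import copy
from collections import deque

# Hypothetical maze: '#' wall, '.' floor, 'S' start, 'G' goal.
MAZE = [
    "#######",
    "#S..#.#",
    "#.#.#.#",
    "#.#...#",
    "#.###.#",
    "#....G#",
    "#######",
]
ACTIONS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


class MazeEnv:
    """Virtual copy of the room; the maze must be fully enclosed by walls."""

    def __init__(self, grid, position):
        self.grid = grid
        self.position = position

    def clone(self):
        return copy.deepcopy(self)

    def step(self, action):
        """Apply an action; return False if it is infeasible (hits a wall)."""
        dr, dc = ACTIONS[action]
        r, c = self.position[0] + dr, self.position[1] + dc
        if self.grid[r][c] == "#":
            return False
        self.position = (r, c)
        return True


def find(grid, symbol):
    for r, row in enumerate(grid):
        c = row.find(symbol)
        if c != -1:
            return (r, c)
    raise ValueError(f"{symbol!r} not found in maze")


def distances_to_goal(grid, goal):
    """Breadth-first search outward from the goal (assumed reward signal)."""
    dist = {goal: 0}
    queue = deque([goal])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ACTIONS.values():
            nxt = (r + dr, c + dc)
            if grid[nxt[0]][nxt[1]] != "#" and nxt not in dist:
                dist[nxt] = dist[(r, c)] + 1
                queue.append(nxt)
    return dist


def imagine_best_action(env, dist):
    """Clone the environment once per action and keep the best feasible one."""
    best_action, best_reward = None, float("-inf")
    for action in ACTIONS:
        imagined = env.clone()
        if not imagined.step(action):
            continue  # infeasible in the imagined world, so discard it
        reward = -dist[imagined.position]
        if reward > best_reward:
            best_action, best_reward = action, reward
    return best_action


def plan(grid):
    """Return the action set that would be sent to the physical robot."""
    start, goal = find(grid, "S"), find(grid, "G")
    dist = distances_to_goal(grid, goal)
    if start not in dist:
        raise ValueError("goal is not reachable from start")
    env, actions = MazeEnv(grid, start), []
    while env.position != goal:
        action = imagine_best_action(env, dist)
        env.step(action)
        actions.append(action)
    return actions


best_actions = plan(MAZE)
print(best_actions)
```

The list returned by `plan` plays the role of the best action set: in this sketch it is the only thing the physical robot would need to receive in order to walk the maze.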

Image of the Real Physical Robot

Image of the Same Robot as it Appears in the Virtual World

IN SUMMARY

When I think about a larger-scale rollout of this approach, I see implications of revolutionary new capabilities in everything from IoT, robotics and self-driving all the way to mind-linked humans, animals and other use cases. Even the components of this solution bring massively valuable capabilities not previously possible. For example, in my earlier iterations of the virtual world I created large sets of actors that learned how to code in Python. It was a long progression to get them started, but I reached a point where they became emergent, and their capabilities advanced beyond their initial instructions. (Please read about my self-improving, self-generating AI to understand more on this, as the capability of emergence in AI is, in my opinion, my greatest achievement, and it will, given the proper type and amount of data, rewrite everything else I’ve done with superior results in ways I could not personally comprehend or foresee.) The interesting thing is that the data and how I set up the objectives were far more important than any particular algorithm, code or library. The actors first had to be coaxed into learning how to create a file on the filesystem, then read files, then create a Python file, then get a Python file to run with an error, then start learning basic math operations in Python. However, once the actors could create and execute Python scripts that used math operations and returned the final values, they advanced on their own to start solving the problem cubes.

This research project was highly valuable and interesting, and it solidified a bridge between the virtual and the physical. It also created a ‘school’ where virtual actors can learn math and coding, and use those skills within the virtual environment to solve problems. My plans for this technology are to advance the coding capabilities of the actors to exceed the capabilities of human programmers, and then provide an easy-to-use interface, probably text at first, then drag and drop, and eventually verbal commands, like the Alexa or Google of coding (e.g. “Autocoder, create a predictive neural network for column x in spreadsheet ‘numbervalues’, use spreadsheet ‘todaysvalues’ as the input, output results to my email”). That’s the thing about technology: it’s highly predictable not only in where we will advance, but in how people will want to use it, so we can create the usability we know we will want tomorrow and make it a reality today.

Thanks for reading,

Trevor E. Chandler